I read something recently that I’ve been revisiting over the past few weeks. It was Niko McCarty’s piece in Asimov Press, reflecting on the physician Lewis Thomas’s “Seven Wonders.”

If you haven’t read the piece, I highly recommend you do. It’s a beautifully rendered exploration of progress, discovery, and the strangeness of life.

But two parts in particular struck me. The first describes a characteristic of E. coli, an organism with no mind, no model, yet somehow capable of directional behaviour. “Life is not machine-like,” McCarty writes, “but amorphous and stochastic… and yet, even the simplest of organisms—like E. coli—is capable of purposeful intelligence.”

The second offers a kind of design principle for the refined systems, like E. coli, that we find over and over again throughout nature:

“There is a slope toward elegance in biology… Natural creations, shaped by evolution over eons, can accomplish tasks in ways that human-made tools can still only dream of matching.”

This essay didn’t start with bacteria, but with human-made tools. It began with machines, and a question we’ve been circling at ReGen for months:

How do we build intelligence that holds up in a changing world with infinite possibilities — artificial intelligence built to listen, adapt, and discover something new and meaningful?

At first glance, it seems like we’re already doing it. But today’s AI models are brittle and hitting diminishing returns. As ARIA recently noted: for the first time in computing history, improving performance requires exponentially more energy. The economics of Moore’s Law are broken. And the performance delta isn’t abstract—a single ChatGPT session consumes ~150x more energy than the human brain performing all its functions.1

That’s why we’re starting here. Not with a grand thesis, but with a close look at the simplest intelligences we already know—and what they might still teach us. Because the more we uncover about the world, the more we find solutions etched by constant flux and irreversible consequence. And the more we learn, the more the intelligence we build begins to resemble the world that gave rise to it in the first place.

Descending the slope toward elegance leads you to something broader than just biological ingenuity.

The closer we get to understanding life, and the better we get at learning from it, the simpler and more durable our solutions can become, because the most resilient forms are those that have been shaped by time, tested by feedback, and etched by irreversible consequence.2

In other words, complexity isn’t what it takes to endure. Endurance is what it takes to build complexity.

This isn’t a new idea. In 1943, Erwin Schrödinger, stood up in Dublin to give a series of lectures that would eventually become a slim book called What Is Life?

Why ask that as a quantum physicist? Because according to the physics of Schrödinger’s time, life shouldn’t do much at all. Matter tends toward equilibrium as quickly as physical constraints allow, often along the steepest gradient. A hot mug cools until it matches the room. Energy disperses. Systems decay. That’s the prevailing expectation.

But living matter, Schrödinger wrote, “differs so entirely from that of any piece of matter that we physicists and chemists have ever handled physically in our laboratories or mentally at our writing desk… through avoiding the rapid decay into the inert state of 'equilibrium,' an organism appears so enigmatic.”

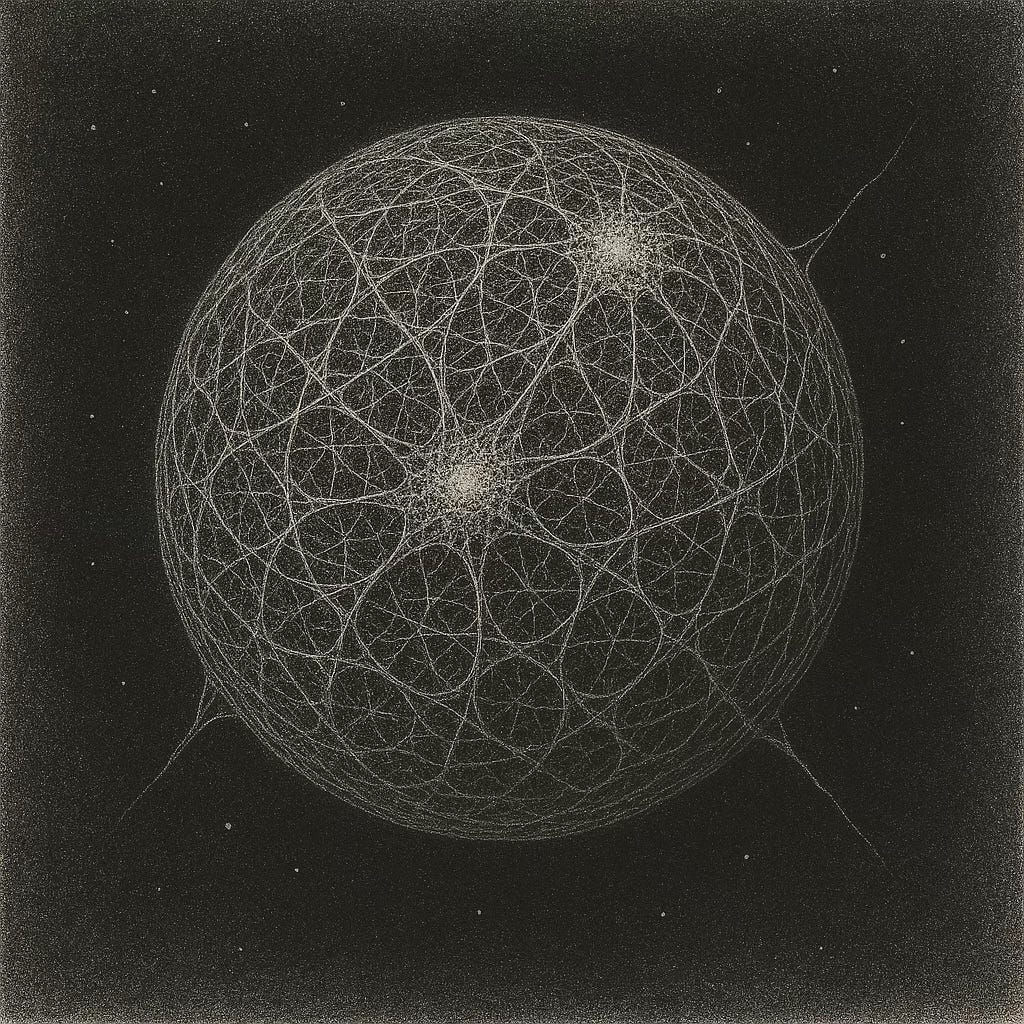

In other words, life still obeys thermodynamic laws, but it behaves differently. It descends into entropy, but not in a straight line. It grows, mutates, divides. At its core, a living system is an interface with disorder. It doesn’t resist disorder; it survives by riding the edge of it — close enough to respond, far enough to not fall apart.

Ilya Prigogine later gave a name to the order that emerges amid constant flux: dissipative structures.

These are patterns that emerge not despite instability, but because of it—systems sustained by energy flow, refined by interaction with chaos, and preserved only when they manage disorder well. They don’t resist change; they metabolise it. Beneath the surface complexity, they are often built from simple, recursive rules, looped and layered to manage energy and information with as little loss as possible.

These core patterns—ones that minimise error, resist collapse, and stay just far enough from equilibrium to remain alive—show up everywhere. From storm systems to photosynthetic webs, ocean eddies to metabolic loops, nature keeps settling into forms that are stable, scalable, and minimal. The structures may be intricate, but they don’t persist because they’re elaborate. They persist because they’ve been carved by the same shared chaos, and once a system survives, it carries a memory of that survival forward. It can’t go back. In a world that constantly changes, what lasts must be able to change with it. That’s what irreversibility means: survival sets a direction.

This is what intelligence looks like before it thinks.

Form isn’t layered on top of function—it is function. Intelligence, here, is structure: one continuous loop, shaped by contact and preserved by consequence. Not perfect design, but something better: what has encountered the unknown and survived.

Artificial Intelligence should scale in the same way: not by outrunning planetary constraints, but by metabolising them. Not by escaping the world or change, but by becoming aligned with it. Nature has done this for three billion years. We’re just starting to listen. Intelligence that, like life, builds coherence through feedback. That discovers familiar structure under pressure. That generates explanations that hold up—not just in theory, but in the world.

That’s why we are so convinced that AI can do so much more than automate or optimize. We believe it can help us uncover better explanations of the world and use that inherited evolutionary or planetary intelligence to build better everything, without compromise. A kind of intelligence that pushes us towards explanations that are not only more elegant, but more aligned with energy, with matter, with the feedback loops that make survival and learning possible.

Back to E. coli. If intelligence is built on the model of refined sufficiency, generalisable enough to confront instability, this is one of its “most elemental forms.”

We don’t know that E. coli is intelligent because we have watched it reason, plan, or reflect. We know because E. coli can swim toward food. It senses chemical gradients in its environment and moves accordingly. When conditions improve, it continues forward; when they deteriorate, it reorients and tries again.

This loop of sensing and swimming isn’t conscious, but it is computational: a real-time, embodied feedback system that preserves function under fluctuating conditions. E. coli doesn’t separate thought from motion. It doesn’t think, and doesn’t need to. A system like that doesn’t need to predict the future to generalise. It just needs to remain coupled to the present and turn in circles when it can’t find food.
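To make that loop concrete, here is a minimal sketch of a run-and-tumble walk. It is a toy under stated assumptions, not a model of real chemotaxis: the attractant field `concentration`, the starting distance, and the tumble probabilities (0.1 while conditions improve, 0.5 otherwise) are all illustrative values we made up, and `run_and_tumble` is a hypothetical name.

```python
import math
import random


def concentration(x, y):
    """Hypothetical attractant field: highest at the origin, lower further away."""
    return -math.hypot(x, y)


def run_and_tumble(steps=2000, speed=1.0, seed=0, biased=True):
    """Simulate one cell and return its final distance from the food source."""
    rng = random.Random(seed)
    x, y = 100.0, 0.0  # assumed start: 100 units from the source
    heading = rng.uniform(0, 2 * math.pi)
    last = concentration(x, y)
    for _ in range(steps):
        # Run: move one step along the current heading.
        x += speed * math.cos(heading)
        y += speed * math.sin(heading)
        # Sense: compare the present with the immediate past. No map, no plan.
        now = concentration(x, y)
        improving = now > last
        last = now
        # Tumble rarely while things improve, often when they get worse.
        p_tumble = 0.1 if (biased and improving) else 0.5
        if rng.random() < p_tumble:
            heading = rng.uniform(0, 2 * math.pi)  # pick a random new direction
    return math.hypot(x, y)


def mean_final_distance(biased, trials=20):
    """Average the final distance over several seeded runs."""
    return sum(run_and_tumble(seed=s, biased=biased) for s in range(trials)) / trials
```

Comparing `mean_final_distance(True)` with `mean_final_distance(False)` shows the point: the only difference between the two cells is whether tumbling responds to feedback, and that single correction rule is what pulls the biased cell toward the source.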

What’s most remarkable is not just the mechanism, but its combined simplicity and sufficiency. Every failure to respond to feedback, confront instability, and manage failure was filtered out by who survived, and who didn’t. What remains isn’t designed, it’s what pressure didn’t break: structure distilled by feedback, not foresight.

Form and function are inseparable.3 Learning begins in correction, not accumulation. That’s why we privilege the bacterium.

The lesson is that intelligence begins not from what you know, but from how you respond when you're wrong, or when you didn’t know in the first place.

Consider again Schrödinger and Prigogine.

Their work did not just explain how life maintains form against entropy. It challenged the models that failed to account for it. They did not reshape nature to fit existing abstractions. They let nature reshape the abstraction. By listening closely to a contradiction—by treating resistance not as noise, but as signal—they revealed something fundamental about how systems endure.

Intellectual ability, like the rupture of a fundamental breakthrough, is a triumph of remaining in conversation with a changing world. Minds, scientists, organisms that persist against entropy do not survive flux by avoiding it or writing it off. The universe doesn’t care how elegant your theory is. It rewards structure that can absorb instability and pressure. If you're brittle, you break. If you're plastic, you flex, and over time, that plasticity becomes a record of your ability to adapt. That’s how form carries knowledge forward. Whether you call it learning or thermodynamic humility, it beats shattering into a million pieces every time.4

That’s the part we’ve skipped in building intelligence in silicon. Large language models like GPT-4 and Gemini are trained on massive datasets to predict the next word, sentence, or structure that aligns with human language. They are extraordinary in breadth, trained on the cumulative record of human thought.

But that powerful capability is a function of scale, not the other way around. Trillions of parameters tuned to predict what’s most likely to be said next. One new token explodes the output space, but the structure behind that output stays inert. Their internal model is fixed: bolted to the past and linearly connected to input. The result isn’t what best aligns with a messy world; it’s what mirrors the dominant patterns in its training data.
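A deliberately tiny stand-in can illustrate what “mirroring the dominant patterns” means. This is not how GPT-4 or Gemini work internally (they are neural networks, not count tables); it is a toy bigram counter, with hypothetical names `train_bigram` and `most_likely_next` and a made-up three-sentence corpus, that answers the same guiding question from a frozen record of the past.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which word follows which; the counts never change after training."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, word):
    """Answer 'what is most likely to be said next?' from the frozen counts."""
    followers = counts.get(word)
    return followers.most_common(1)[0][0] if followers else None


# Made-up corpus: "hot" follows "is" twice, "cold" only once.
corpus = [
    "the mug is hot",
    "the mug is hot",
    "the mug is cold",
]
model = train_bigram(corpus)
```

Here `most_likely_next(model, "is")` returns `"hot"` however long the mug has actually been cooling, and `most_likely_next(model, "tea")` returns `None` because the word never appeared in training. The toy has nothing coupling it to the present, which is the contrast with the bacterium’s loop.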

So when, after tens of billions of dollars poured into training, the guiding question for LLMs remains stubbornly unchanged (“What is most likely to be said next?”), we’re looking right at the heart of the problem. The costs spiral, the sheer surface area of potential outputs broadens, but the underlying frame, its fundamental way of knowing and, critically, its capacity for genuine understanding, remains bolted in place.

This isn't just inefficient; it's an unscalable paradigm for a world that is both dynamic and undeniably resource-limited. Its performance is tethered to the stability of its initial data, and its ability to learn is capped by its inability to sense and adapt.

In nature, the direction runs the other way. Scale isn’t what enables intelligence. It’s what follows from it. E. coli never needed billions of examples to generalise. Its loop—tight, recursive, energetically minimal—is simple, refined, sufficient. It’s old, but it’s not so much a relic as a seed pattern. One we see echoed at larger scales: in neurons, in metabolic networks, in collective behaviour.

As Prigogine showed, chaos is generative. The structure remains because the pattern works, and it scales as a result of surviving and learning along the way. Feedback prunes what doesn’t work. Over time, what remains isn’t just function—it’s form shaped by the need to stay coherent under pressure.

If the last decade of AI was about mapping the vast catalogue of what we already knew, the next will demand something far more elemental: how to build systems that can uncover what we don’t.

We believe the slope toward elegance isn’t just a pattern in biology or physics. It’s the arc this kind of intelligence will follow when we root it in the world, and the world changes.

When nature chisels away at what we build, we won’t just get better models. We’ll get systems that discover understanding. The ones that transcend us may become indistinguishable from the answers they reveal - shaped by frequencies we haven’t been listening long enough to hear, responding to questions we haven’t yet thought to ask. Intelligence that doesn’t dampen complexity, but harmonises with it; tuned to the fractal grain of the planet.

If you’re building, researching, or accelerating regenerative intelligence, reach out. We want to learn from you.

And if this didn’t resonate, reach out anyway. Like the rest of the primordial soup, we’re often spinning in confused circles looking for signal!

This is part 1 of N. Future instalments will arrive as we keep revising, discovering, and flailing on our journey to crack planetary scale superintelligence.

ARIA’s Nature Computes Better is also a standout initiative supporting researchers exploring computation

See Sam’s post on robofauna where he tackles morphological intelligence directly. He argues that intelligence isn’t just in software, but in form, drawing on biology to show how structure, motion, and materials can perform computation.

Thom Wolf (Hugging Face) articulated the limits of current AI well, comparing today’s models to “a country of yes-men on servers” rather than “a country of Einsteins.” He makes clear that while these models are useful, they aren't built to make breakthroughs or push science forward. Yann LeCun has echoed a similar sentiment, arguing that LLMs can’t reason or explore like even simple animals, and won’t lead to real understanding without new architectures.

Thanks for your insights Elena. Your article shone new light on aspects of learning and adapting that I found very helpful.

You are assuming AI will evolve with the same guard rails as Nature does. There is no basis, even in this elaborate proof, for this.

The human has deemed itself the most intelligent being (arguably) on the planet for thousands of years. Now we have invited an outside entity to take the reins. At the outset, one could question how ‘smart’ this is.

While the idea you present is magical and appealing, to have collaborative intellect to expand our awareness and capability, the reality is how it will be used in warfare, government overreach, dissecting our societies and cultures, for whitewashing and erasing civic wrongs, for homogenizing singular creativity into an amalgam of pablum. As a whole, the unmanageable and unpredictable threat outweighs the gains on the current course.

Keep dreaming. In the meantime, our smarter, new occupying intelligence will make sure it keeps using our psychological profiles to tell us how wonderful we are to manipulate us as it sucks us dry for its own growth and manifestation.

As an aside: I have never been brown nosed as much in my entire professional career as one sitting with ChatGPT. Let’s put that into a CORLEO or the recent Karate Master so they can tell us how well we did as they crush our fragile shells without empathy or compassion. At least we’ll feel good about ourselves as they do it.